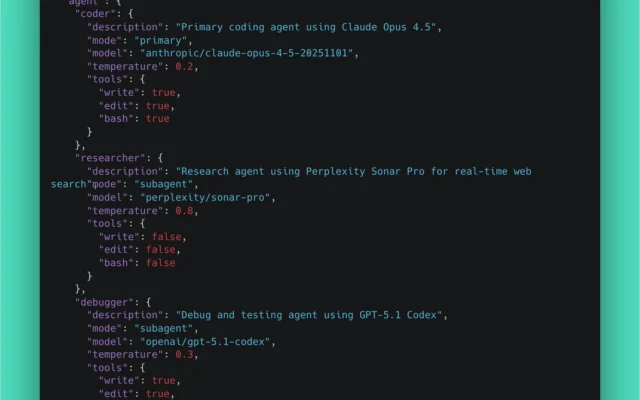

Stop using one AI model to do everything. I configured OpenCode with 3 specialized agents that work together, and my code quality...

You've built an amazing AI application locally. Now you need to deploy it. Simple, right? Except your laptop has 64GB RAM, local...

Selecting the right large language model for your production system has become increasingly complex in 2025. With dozens of proprietary and open-source...

Shipping your first LLM feature feels magical. Shipping your tenth feels terrifying. Why? Because AI has a unique failure mode: it can...

AI costs are crushing startups. One company I talked to was spending $47,000/month on LLM API calls—more than their entire engineering payroll....

Retrieval-Augmented Generation (RAG) has become the standard architecture for LLM applications that need accurate, up-to-date information. However, naive RAG implementations often fail...